It's been a really fun and challenging year building rocketbotroyale.winterpixel.io. The game is chock-full of some really neat tech and I often get asked questions about how we did X, or how we accomplished Y. For a development team of 1 (now 3), I'm pretty proud of what we've been able to put together. We knew we wanted to continue to build online multiplayer games, so a big question was what tech platforms we were going to depend on. Having recently been burned by depending on a core product that Amazon eventually killed and sunset (GameSparks), I discovered a new appreciation for dependencies that were ultimately under my control.

Godot Game Engine

For the core engine we decided to invest in the Godot Game Engine. It was by far the most mature OSS game engine we could find. The engine itself is written in C++ and compiles for many different platforms (including wasm & WebGL2 for the web). So we knew we would be right at home and able to solve issues should any come up (or so we thought... more on that in an upcoming post). There were plenty of issues and changes needed, but this ended up being a pretty good foundational choice.

Kubernetes

Building a competitive online multiplayer game means dedicated cloud servers. There's just no other alternative really. People love to cheat, and they will, and to be honest there just isn't anything like the QoS a cloud platform gives you in terms of dependable compute and network resources. To deploy our backend infrastructure we decided to use Kubernetes on DigitalOcean.

There were a few key reasons we decided to try Kubernetes:

- With Kubernetes, to a certain degree, we can be cloud agnostic. We deploy almost everything on stock Kubernetes with Helm charts, and for the most part we avoid depending on features specific to one cloud provider.

- Provisioning and scaling of our gameservers with the Agones project. If you haven't heard of Agones, it's probably one of my favorite pieces of software I've come across in the past few years. It handles what we were doing manually in my past projects, and once I saw it I knew I wanted to try it out. Spoiler alert: It rocks!

- Tapping into the Kubernetes OSS ecosystem. We didn't quite understand what the value would be at the time, but building things "where the action is" can have its advantages. We ended up using a lot of existing Kubernetes software, as we continuously found there was a package already available for something we needed. Here's the shortlist of packages that we're using in-cluster:

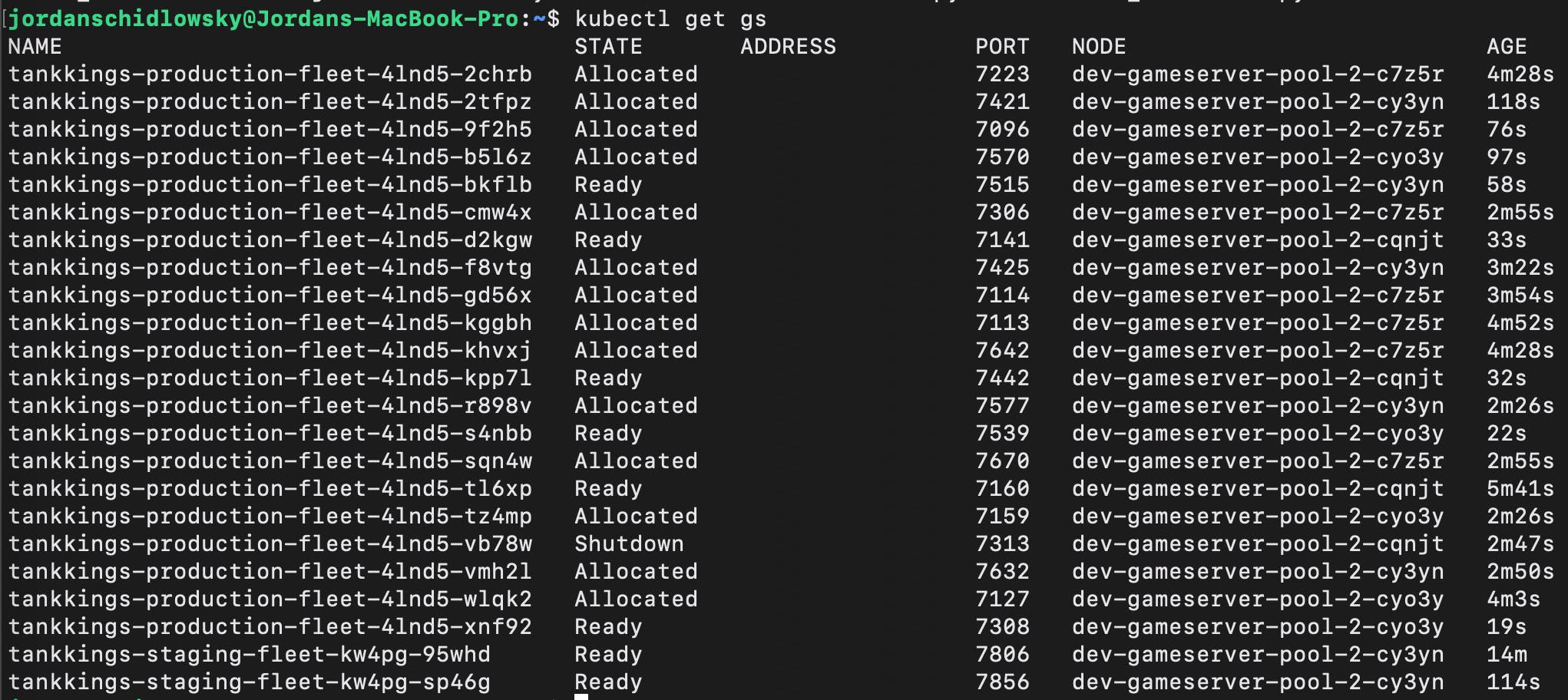

- Agones - Godot gameserver instance orchestration and management. Each gameserver runs on a dedicated auto-scaling node pool specifically reserved for hosting game sessions. Below is the current state of our Godot gameservers in both production and staging environments:

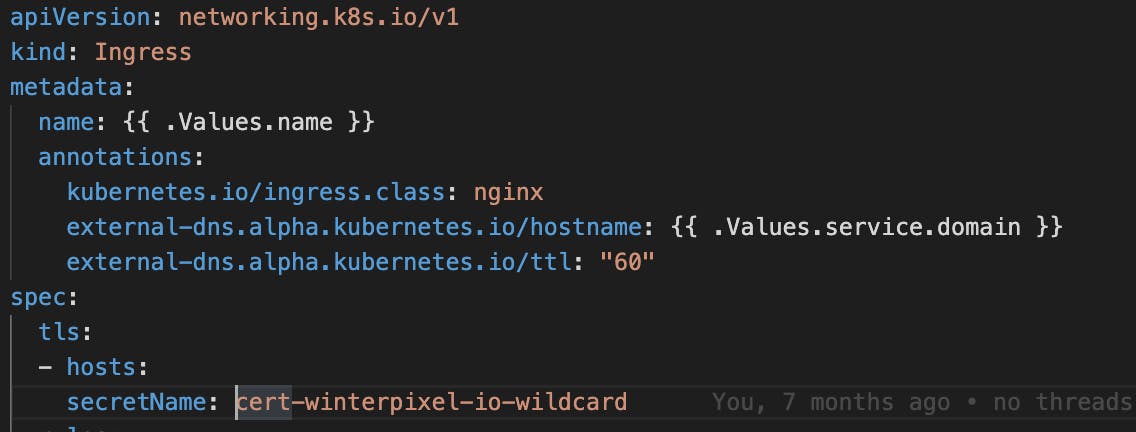

- Cert-manager - Auto provisioning of SSL certificates. Used for websites, matchmaking, gameservers, and other services. This really makes life easy as configuring and provisioning an SSL certificate is as simple as adding an annotation. We use a single wildcard certificate and as such are using the DNS01 challenge method.

- External-DNS - Auto management of DNS entries in our cloud provider's DNS zones. Combined with the auto-provisioned wildcard cert, this allows us to publicly serve any service in our cluster with a couple of lines of configuration.

- Contour-Ingress - An Envoy-based reverse proxy for routing client websockets and other frontend services. We tried a few ingress controllers (Nginx, HAProxy, etc.), and the deciding factor for an Envoy-based one was that it was the ONLY ingress controller to handle websocket connections properly across configuration reloads. Believe me, we tried tirelessly to get Nginx and subsequently HAProxy to handle long-lived socket connections properly across configuration reloads, and we could not get it to work. Neither piece of software was built to keep serving open sockets through a change in configuration, and both have more-or-less band-aid solutions for these persistent connections. Both Nginx and HAProxy implement a kind of zombified process system: the existing process hangs around serving existing connections while a new process is configured and spun up to handle new incoming connections. This design led to growing memory usage under workloads that require constant reconfiguration (like ours). Envoy seems built to handle this specific scenario, and it seems even the engineering team at Slack eventually came to the same conclusion.

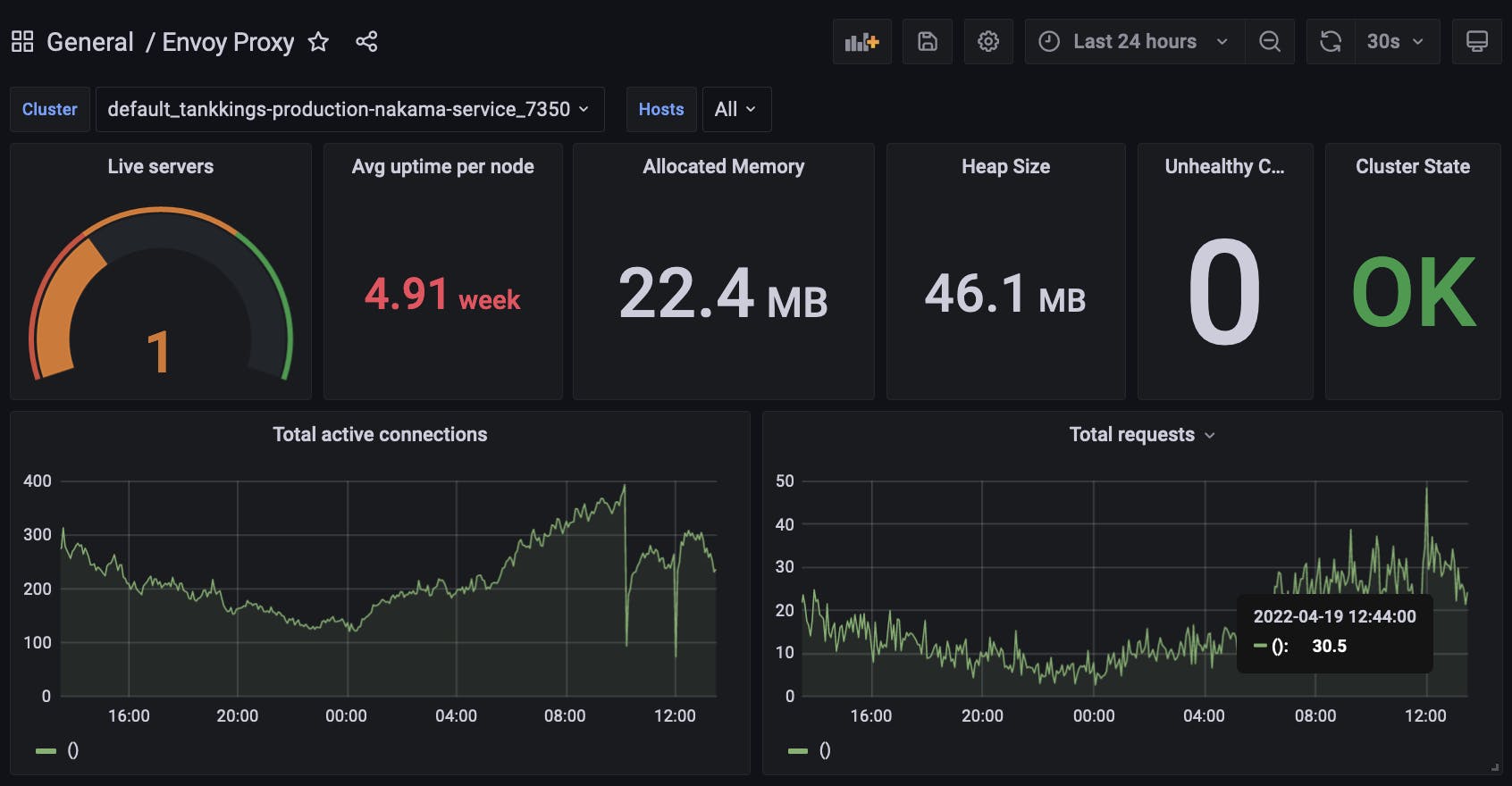

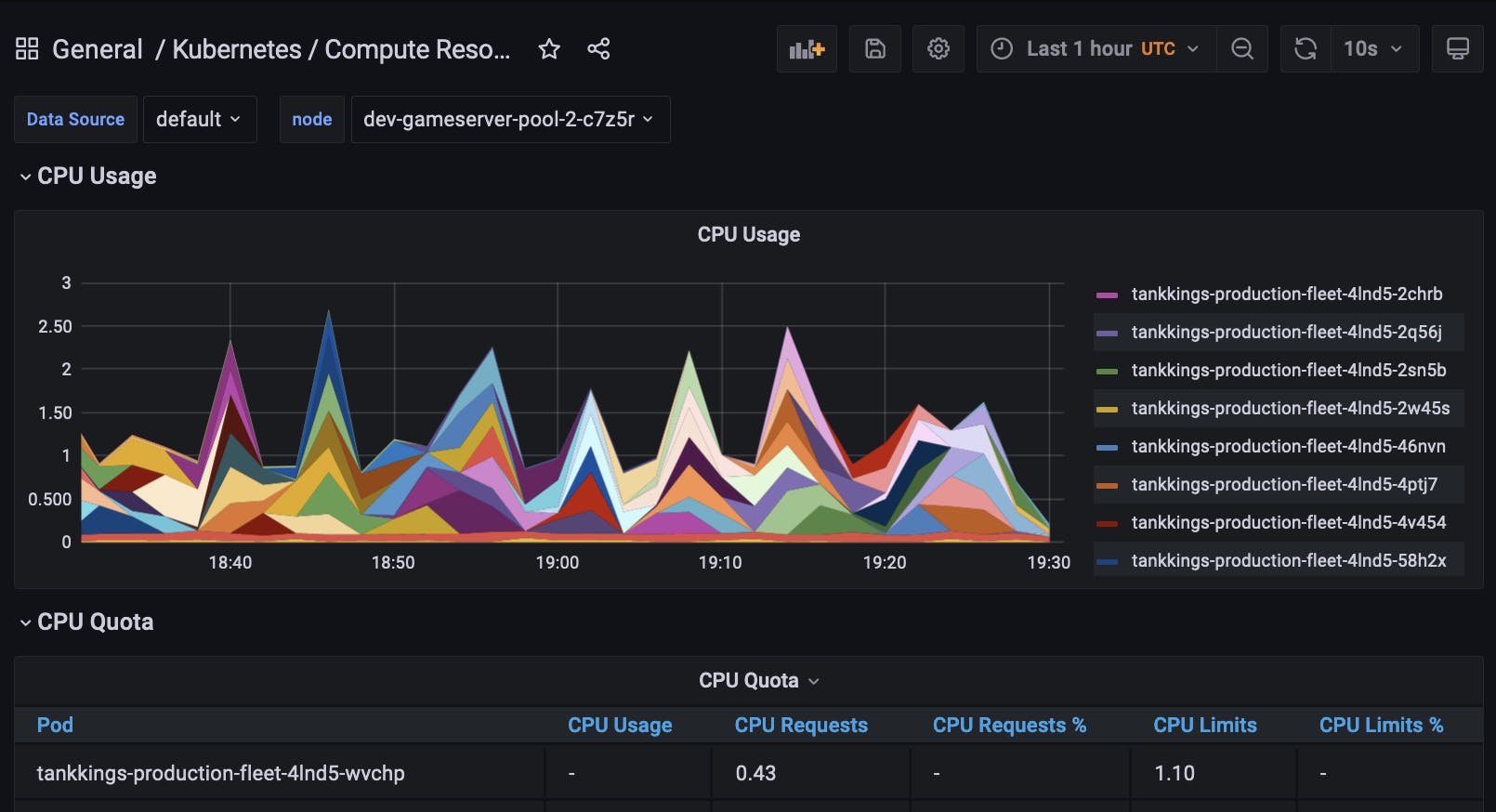

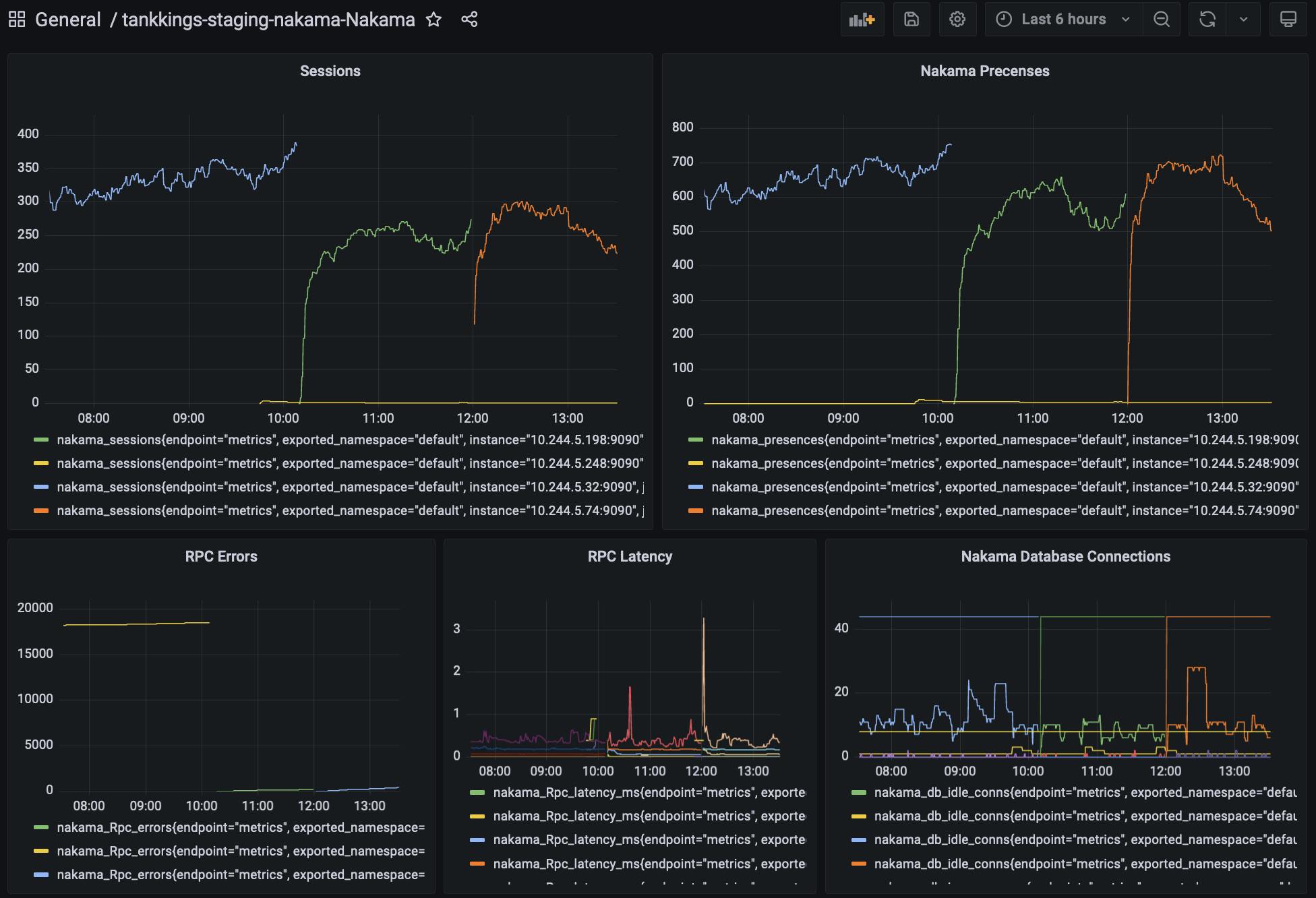

- Prometheus & Grafana - Cluster-wide metrics, analytics and visuals. Shown below is our Envoy proxy, a gameserver node that's hosting game sessions, and a snapshot of our Nakama application:

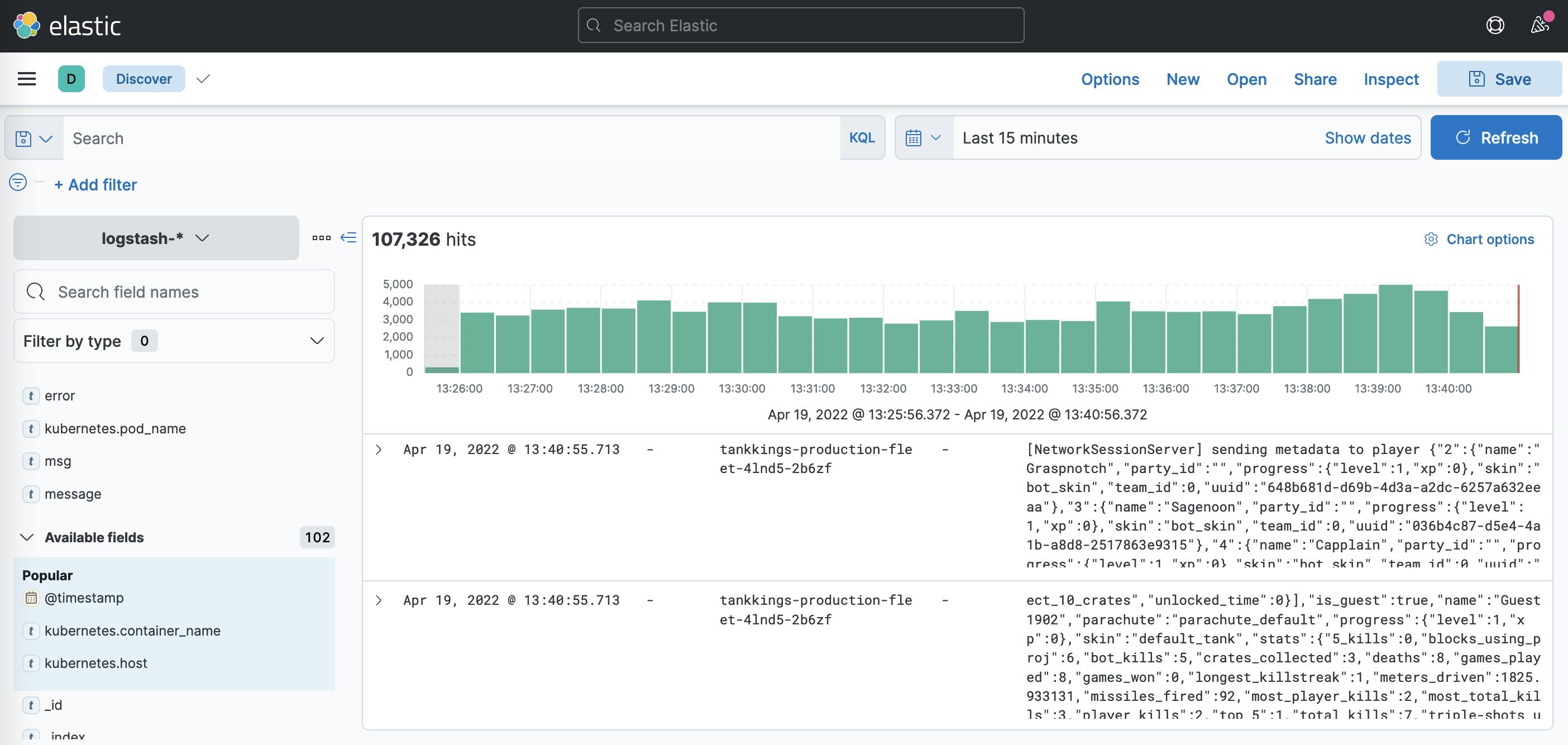

- Elasticsearch & Kibana - Logstash based log aggregation and search. A very important component for searching and filtering our Godot-based gameserver logs. We use the EFK stack to collect and aggregate cluster logs into Elasticsearch:

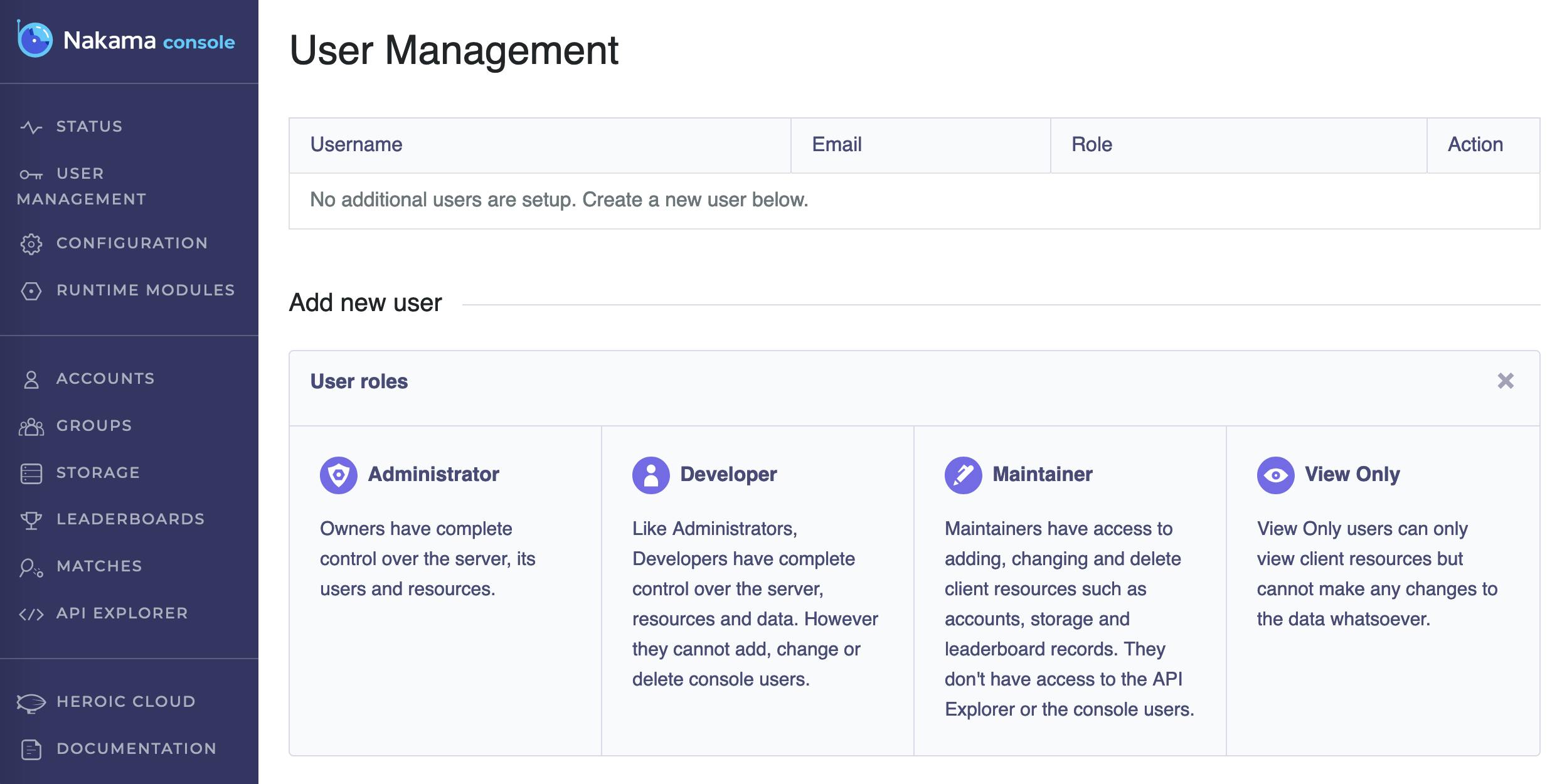

- Nakama - Game backend service. Think user accounts, leaderboards, stats, seasons, etc.

- Nginx - Hosts our static website backends, e.g. rocketbotroyale.winterpixel.io
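As a rough illustration of the short per-service configuration described in the External-DNS item above, the sketch below builds a standard `networking.k8s.io/v1` Ingress manifest as a Python dict and prints it as JSON, which `kubectl apply -f -` accepts. The hostname, service name, port, ingress class name, and wildcard TLS secret name are all hypothetical placeholders rather than values from our cluster, and the helper function `make_ingress` is made up for this example.

```python
import json


def make_ingress(name: str, host: str, service: str, port: int,
                 tls_secret: str, ingress_class: str) -> dict:
    """Build a networking.k8s.io/v1 Ingress manifest for a single hostname."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": name,
            "annotations": {
                # Ask External-DNS to manage a DNS record for this host.
                "external-dns.alpha.kubernetes.io/hostname": host,
            },
        },
        "spec": {
            "ingressClassName": ingress_class,
            # Point at one shared wildcard certificate secret (hypothetical name).
            "tls": [{"hosts": [host], "secretName": tls_secret}],
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service,
                                            "port": {"number": port}}},
                }]},
            }],
        },
    }


# All values below are placeholders chosen for illustration.
manifest = make_ingress(
    name="example-service",
    host="example-service.example.com",
    service="example-service",
    port=80,
    tls_secret="wildcard-example-com-tls",
    ingress_class="contour",
)
print(json.dumps(manifest, indent=2))
```

The only per-service pieces are the hostname and the backend service. The certificate and the DNS record come from the cluster-wide tooling, which is what keeps the per-service configuration short.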

DigitalOcean

We decided to go with DigitalOcean as our cloud vendor. This is a little bit of a secret, but one reason we went with DigitalOcean is that egress traffic costs on the big 3 (AWS, GCP, Azure) are 10X what they are on DigitalOcean. Yes, TEN TIMES. On GCP we were consistently paying ~$0.10/GB egress and in some cases up to $0.12/GB for North America to Asia-Pacific traffic. With any of the big 3 you can estimate paying roughly $0.10/GB egress, whereas on DigitalOcean you will come in around $0.01/GB. Now, granted, the quality of the routes isn't quite as good as on the big 3, but you may be hard pressed to notice. And considering egress traffic on GCP was 80% of our cloud bill, it was a no-brainer.
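To make the arithmetic concrete, here is a rough sketch in Python. The per-GB rates and the 80% egress share are the figures quoted above. The 50 TB monthly traffic volume is a made-up number, and the assumption that the non-egress part of the bill stays the same after switching providers is a simplification for illustration.

```python
def egress_cost(gb: float, rate_per_gb: float) -> float:
    """Egress cost in dollars for `gb` gigabytes at a flat per-GB rate."""
    return gb * rate_per_gb


def bill_after_switch(egress_share: float, old_rate: float, new_rate: float) -> float:
    """Fraction of the original bill left after moving egress to a cheaper rate.

    Assumes the non-egress part of the bill does not change (a simplification).
    """
    other_share = 1.0 - egress_share
    return other_share + egress_share * (new_rate / old_rate)


BIG3_RATE = 0.10  # $/GB, rough figure quoted above
DO_RATE = 0.01    # $/GB, rough figure quoted above

# Hypothetical month with 50 TB (50,000 GB) of egress traffic.
monthly_gb = 50_000
print(f"Big 3:        ${egress_cost(monthly_gb, BIG3_RATE):,.2f}")
print(f"DigitalOcean: ${egress_cost(monthly_gb, DO_RATE):,.2f}")

remaining = bill_after_switch(0.80, BIG3_RATE, DO_RATE)
print(f"Bill after switch: {remaining:.0%} of the original")
```

Under that simplification, a bill that was 80% egress drops to roughly 28% of its original size: the untouched 20% plus a tenth of the 80%.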

I also wanted to use DigitalOcean because cloud services are what they do, and all they do. I'm starting to favor working with companies and services that aren't continuously looking to integrate into other industry verticals. I don't want my cloud vendor selling ads, books, building autonomous cars, producing TV content, and everything else under the sun. I want their focus on cloud services.

Nakama

Another big decision was what backend to use. In the end we decided to run with Nakama on Postgres, and it was fairly trivial to build a Helm chart for our Nakama deployment. Nakama supports Prometheus monitoring, and it was quite easy to integrate it into our cluster. It's also quite obvious that Heroic Labs (the company behind Nakama) uses Nakama in Kubernetes themselves for their own hosted service.

But going with Nakama was a tough decision, because Supabase was really looking like a great option. In the end we went with something that was built specifically for games. The biggest risk for us in choosing to build on Nakama is that Nakama is really open-core, which basically means that the "core" of the software is open source, while other pieces and features are closed and only available behind an enterprise license.

For the most part, Nakama is somewhat transparent about what works in OSS Nakama and what is closed behind their enterprise offering.

The short of it is that you'll find it pretty tough to scale Nakama without using their hosted solution or buying an enterprise license. To be fair, this business model makes a lot of sense for them, and it's one I would use myself in the same situation. And to their credit they do offer an enterprise license as well as their own hosted solution. The enterprise license is a really nice option for teams like us that want to manage our own application servers. For the time being, we're going to push a single Nakama node as far as we can.

The Whole Package

Overall I'm really happy with the choices we made. There were some learning curves, yes, but the dependencies we built on are all open-source and well supported. Having such a flexible suite of building blocks will allow us to iterate on the next set of games much faster. Thanks to the many contributors and maintainers of all the aforementioned projects!